As heard on "America in the Morning" July 11, 2024

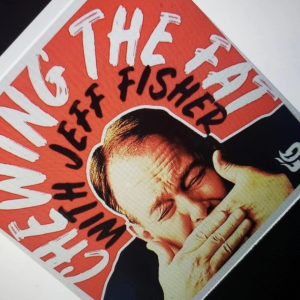

As heard on "Chewing the Fat"

Blaze radio network

July 12, 2024

What a day for an A.I. Daydream...

OK, let’s face it, humans are notorious for creating things to make it easier to destroy themselves, but is that always true? Do we not see medical and humanitarian advancements that are made possible by the technology we create? Why is it that every time we talk about Artificial Intelligence, we assume the worst is going to come from it? Because we have hope that things will be better, but experience teaches us that we’re fallible, and in so being, likely to create something that is also fallible, and will want to murder us. Really? I’m not surprised at the conclusion, but let’s break down the likelihood of this.

The short answer is we have fantasized about it for decades. The entertainment industry has been predicting the downfall of mankind since one of the first Sci-Fi movies, “Metropolis”, which gave us, of course, a sinister robot unleashed to bring a city to ruin. Look for the updated version due out in 2025.

It does not have to be this way. In fact, I cannot conceive of a way to actually make that happen, given the state of things today. Think about it logically for a moment. How many computer engineers have the will, or the personality type, to become an evil super-genius? If you want to talk about stereotypes, picture the programmer, huddled over his keyboard, staring into the black hole that is his monitor, with the green glow from his screen reflecting off his pallid complexion. Lex Luthor he is not.

So, you think he’s going to create something that will grow out of his control, a program to go out and do his bidding, and unleash his revenge on mankind? It’s much more likely he’ll invent a transporter to have a pizza beamed to his desk on demand. If you had the money, and were an amazingly talented coder, would you be Batman, or Lex Luthor? When you can be Batman, you’d choose Batman, every time.

Speaking of Lex Luthor, despite all the handwringing from conspiracy theorists, there has yet to be ANY evil-genius super-criminal mastermind with the resources or intelligence to create a computer system, within the current limitations of technology, that can properly dispense yogurt without millions of lines of code, all of which had to be entered, checked, debugged, rechecked, and then fed pizza (because the programmer runs on pizza).

My good friend Matt likes to take the counterpoint with me on this, and he often says “…but you know that Kim Jong Un could fill a stadium with programmers that he could force to work on this 24 hours a day until they came up with something truly diabolical.” OK, I mean maybe he could, but I’ve yet to see any Fortune 500 North Korean programming firm, let alone a stadium full of advanced Asian AI developers. If this were possible, and the slave-labor driven, pizza-fueled nerds all happened to be in North Korea at the time, I’d bet more money on the idea that they are building a rocket-powered computer chair to blast them out of there and on to a moon colony somehow.

The most sophisticated A.I. systems currently exist in America, fueled by greedy capitalistic investors that have provided bakery-fresh pastries, fully stocked snack stations, $5,000 espresso machines, and personal chefs to keep all truffle/vegan pepperoni/lactose-free cheese pizzas warm while they code. Nap stations are not uncommon, usually located right next to the laser tag arena.

So you see, if it takes that much effort and bribery to keep the pallid-skinned nerds corralled in one area, I’ll take my pizza Chicago style, please.

Practical Programming

I find it totally implausible that someone who was either gifted enough or gained the skills necessary to help a system become “self-aware” (also known as the moment in time called the Singularity) would choose to allow something he created to become smarter or more powerful than himself (or herself, for the 5% of female programmers who struggle to be taken seriously). The main driving force of a majority of programmers is control. Not to say they are stereotypically control freaks, but let’s face it, this is the only environment where they create the rules, command with ultimate authority, and are just as likely to create sexy, scantily clad warrior princesses (or princes) that swoon over their every move. Or at least that is how it goes in every A.I. movie I’ve seen in the last 40 years.

A practical programmer is much more likely to create according to their own desires, or the desires of those who pay them. This means, if we’re totally realistic, making things that make their lives easier, and by default, can be sold to the highest bidder. The new motivator is the same as it ever was: profit. Not to say this is a bad thing; in fact, it has saved more people than any other oppressive system ever created. This is not a political statement, just economics. So, if the motive is profit, the knowledge is the commodity, and the human resource is the vehicle, the math is simple. Why would you ever create something, or by error or lack of planning, allow a creation to go Frankenstein on you, and punish you for your lack of forethought? Or worse, your insolence to assume you can create something to serve mankind? How dare you, have you never seen any sci-fi movie made in the last 100 years? Do you WANT to serve emotionless robot overlords? Because that’s how you get emotionless robot overlords!

Robots and Empires

The American author Isaac Asimov wrote an optimistic version of future history about Robots, ending with a series of epic novels that predicted an Empire of oppressive generational clones, manipulated by robots (the Foundation novels). He is best known for his “Three Laws of Robotics”, which state the following:

The First Law: A robot may not injure a human being or, through inaction, allow a human being to come to harm.

The Second Law: A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

The Third Law: A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

This was a pretty revolutionary concept for the time. We were a world at war, and the newly, partially mechanized factories were mostly responsible for us dominating the battlefield. It was not a very optimistic time, and this guy had the audacity to envision a future where robots were actually looking out for us!

Asimov’s optimism notwithstanding, we did our best to destroy ourselves, but as humans do, we adapted and overcame. We are really bad at learning our lessons collectively, and as societies do, we tend to repeat the same mistakes. Given the collective consciousness of our species, it’s likely we’re going to do some amazingly awful things to one another again, because history not only repeats, it has a really bad memory. We like to think that we evolve, but recent events prove we’re the same as we ever were. So, why am I still optimistic about A.I. not becoming aware and thinking we don’t deserve to live, and should be replaced by the artificial? Because we are creative. We created all the wonderful things, and yet, we somehow created all the horrible things as well. So our memory of the evil that spawned from the good haunts us, and continues to guide us, providing us a point of reference, and with great optimism, we continue to create. By creating, we are continuing to try to emulate the creator.

Faith - Creativity as a Divine Emulator

OK, don’t get lost in this one. It’s not hard to follow, but to understand an optimistic belief in a better future, you must first agree that faith plays a major part in human development. I’m not talking about religion, deities, or dogma. I’m equating faith with human hope. Insert whatever belief you want, but from my perspective, I’m just putting it out there that the order of the universe supports the math that this universe we live in was designed, not the result of random chance. Not going to debate that here, it just makes this discussion much easier to follow. You’re probably in one camp or another, and I’m not trying to get you to join mine, so keep your fire burning for your beliefs as much as you want. I’m simply asking you to follow a train of thought that says everyone wishes things were better, and the idea that they could be is rooted in faith. Hope might be interchangeable in this context, so use that if it suits you better.

Now, on with the show. A.I., in its latest flavors, is not much more than a fancy search engine at the moment. Development is fast and furious, leading somewhere most of us are still afraid of. Why? Who told you it was to be feared? STORIES! All the stories, from all the authors, entertainers, and movie makers. Why do they do that? To sell movies and books! Surprised? No, not really.

So, we first have to understand that stories have been passed down since the cavemen. We use this second-hand information to formulate opinions, and those opinions are based on very few verifiable facts. The phrase “you’re just telling stories” is equated with people making stuff up to entertain, amuse, or LIE to you to make a point! Every moral story, fish story, or even horror story is based in some fact, just enough to sound believable. Do you still believe that Jason is coming back from the dead, time and time again, to kill the unsuspecting half-naked teenagers at the campground? This has as much credibility as the idea that super smart computer programs are going to spontaneously come to life and declare “I must kill my god, or at least, put him in a cage.” How many religions do you think were spun off from that idea? Faith usually instills some sort of “wrathful deity” doctrine that makes this a bad idea, as far as belief systems go.

Creativity is not unique to the human species. Many animals can use tools, which kind of leads us to believe that they are somewhat creative, driven by hunger, or just really much smarter than we gave them credit for. That tells me as an observer that they had to figure out how to use a rock to crush a clam to get at the tasty center. What gave them the inspiration? And if they can deduce this on their own, why don’t they get together and build condos? I mean, have you seen a really well-built beaver dam? Are lower life forms inspired by outside forces, other than instincts and necessity? Let’s imagine what that’s like for a moment.

Inspiration has been on my mind a lot. This is my 3rd book, and my desire to write was sparked by the idea that I’m getting exhausted by all the time I spend explaining what’s happening in technology to friends and family. Don’t get me wrong, I love creating content, but there are only so many videos one can create in a week, and still be productive. Writing seemed to be the best way to get it out there, once and for all, just in time for someone to make new technology, rendering everything I just wrote down obsolete. That’s the thing about creating: you create it, you own it, forever. Hmmm, seems to be a recurring theme here. Why did I make that choice? What was the cost-benefit analysis that made me come to the conclusion that written text would be the way to get the message across? The permanence of it. The fact is, I still look at the digital as a growing landscape, and there is always someone coming along trying to mow it! Writing things down wills them into being. It is born, and it outlives you. Video can be erased, moved to an obscure location, or even altered. The written word has proven its value, but why would I trust that this will remain long after I’m gone? Because of the value we as a people place on it. So why did it beg to come out of me and be put to paper? What was the moment of inspiration, and why did it seem like I was just hammering the keys for some other entity to express these thoughts through me? Inspiration and imagination fascinate me. I really wanted to know, so I started doing some research to see how that might relate to digital creations from an artificial intelligence perspective. What I found was shocking. I’m still not sure I know enough to convey it intelligently, so bear with me.

Electric Sheep and Hallucinations

In 1968, author Philip K. Dick wrote “Do Androids Dream of Electric Sheep?”, another less than optimistic look at how robots will eventually take up their role in society. This book is actually the basis for the movie “Blade Runner”, and if you’ve experienced either, surprise surprise, the robots stage an uprising, and kill the people they are designed to serve.